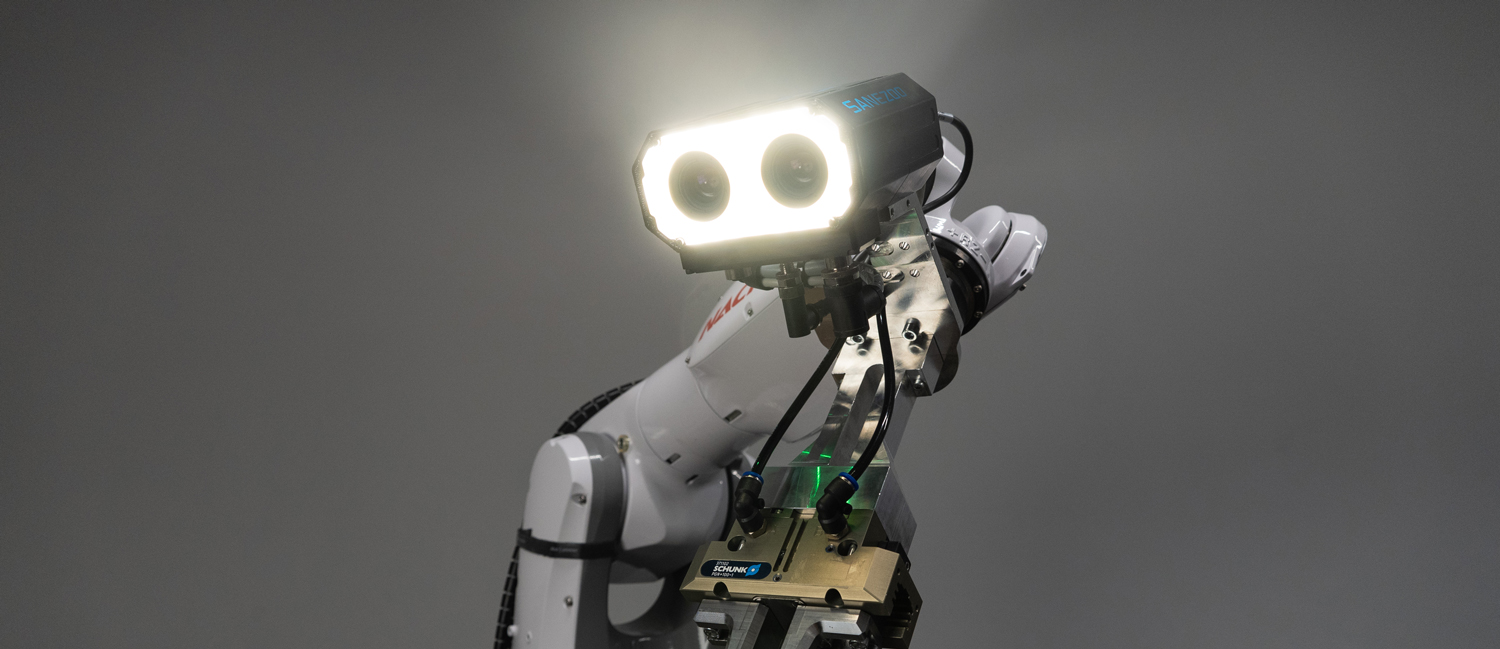

One of Sanezoo's products is the Grasp system for automatic 3D picking of parts from a box and feeding them into a line or machine. (Source: Sanezoo)


Exciting News: Sanezoo's Innovation Featured in MM Industrial Spectrum Magazine!

The Brno-based company Sanezoo has made a name for itself among professionals in the field of automation and robotics over the six years of its operation. Still, most of us first heard about it only when it was awarded the gold medal at last year's International Engineering Fair for its intelligent visual quality control camera system. This award was received in Brno by the founders of the company – Lubos Brzobohaty, CEO, and Lubos Lorenc, Ph.D., CTO. At the turn of the year, the CEO visited us in the editorial office, and we had the opportunity to ask him a few questions.

The full version of the article by MM Industrial Spectrum magazine is available here:

Founder and CEO of Sanezoo Lubos Brzobohaty visiting the editorial office of MM Industrial Spectrum magazine. (Photo: I. Heisler)

MM: How would you describe to our readers what your company does?

Lubos B.: My colleague and I founded Sanezoo more than six years ago to help manufacturers automate quality control. We started with camera systems. We thought it was a shame that people in manufacturing were doing tedious manual work that demanded constant attention and was prone to errors that hurt production quality. People do make mistakes: they lose concentration, their minds wander, they may feel unwell, and so on. Even with the best effort, no one can do such work with full concentration and without error for several hours, let alone a whole shift. Obviously, this is a task for robots. That's why we started developing a visual quality control system that helps manufacturing companies automate these activities. Right from the start, we found that many tasks, such as visual inspection, measurement and comparison, had already been solved. However, inspection of hard-to-scan surfaces, typically shiny ones, had not yet been solved reliably. Industrial automation companies have avoided these challenges because camera systems struggle with light reflected in different ways and with various artifacts that the camera sees as deviations, even though they are merely visual differences, not functional defects. Under a specific combination of lighting and viewing angle, different reflections or variations in the microstructure of the material surface can appear to the camera system as a change in shape or even a defect, which often leads to false defect detections. It is not uncommon for inspection systems to produce so many false rejects that the manufacturer has to deploy additional people to check the system's results. Such a control system becomes useless. This is the challenge we have been focusing on and successfully solving, and not just with AI algorithms.

MM: In what way is your visual quality control system better?

Lubos B.: Our added value is that our system can handle these hard-to-distinguish deviations and reliably tell defective pieces from good ones. We have designed unique lighting systems for different materials and different types of products. Our industrial lights are composed of a large number of independently controlled sections. By activating them in sequence, the system mimics a person who, for example, turns a shiny object in the light in various ways to find the viewing angle at which he or she can see the defect. We take several images of the part with different lighting parameters in quick succession and analyze these images. There are many similar cases in different areas of production, for example shiny metal or plastic parts, painted parts or parts in blister packs. In such situations, conventional camera systems cannot handle the task. There are many applications on the market for which our solutions are suitable. In short, we are solving problems that manufacturing companies have not yet been able to solve, both in quality control and in picking parts for production lines. Most other operations are being successfully automated, but quality control and part handling remain the domain of manual labor. Even modern, highly automated companies must rely on employees for these two activities. As many as eighty percent of the people who work in modern manufacturing are engaged in these two tasks. And we can automate these activities.
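Combining a sequence of images taken under different lighting sections can be illustrated with a generic compositing technique. The sketch below is a minimal illustration, not Sanezoo's algorithm: it assumes the images of one part are already aligned, grayscale and stored as nested lists of intensities between 0 and 1, and the function name `lighting_composites` is hypothetical. Taking each pixel's minimum across the sequence suppresses a glare spot that appears under only some lighting sections, while the per-pixel range (maximum minus minimum) shows how strongly each pixel reacts to the changing light.

```python
from typing import List, Tuple

# Hypothetical representation: a grayscale image as rows of intensities in [0, 1].
Image = List[List[float]]


def lighting_composites(stack: List[Image]) -> Tuple[Image, Image]:
    """Combine aligned images of one part taken under different lighting sections.

    Returns (min_image, range_image):
    - min_image: each pixel's darkest value across the sequence, which suppresses
      glare that appears under only some lighting directions;
    - range_image: per-pixel max minus min, i.e. how strongly each pixel reacts
      to the changing illumination.
    """
    if not stack:
        raise ValueError("need at least one image")
    height, width = len(stack[0]), len(stack[0][0])
    for img in stack:
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError("all images must have the same size")

    min_image = [[min(img[y][x] for img in stack) for x in range(width)]
                 for y in range(height)]
    max_image = [[max(img[y][x] for img in stack) for x in range(width)]
                 for y in range(height)]
    range_image = [[max_image[y][x] - min_image[y][x] for x in range(width)]
                   for y in range(height)]
    return min_image, range_image


# Usage: a glare spot at pixel (0, 0) appears only under the first lighting section.
shots = [
    [[1.0, 0.2], [0.2, 0.2]],
    [[0.3, 0.2], [0.2, 0.2]],
    [[0.25, 0.2], [0.2, 0.2]],
]
min_img, range_img = lighting_composites(shots)
print(min_img)    # [[0.25, 0.2], [0.2, 0.2]]
print(range_img)  # [[0.75, 0.0], [0.0, 0.0]]
```

A production system would also need image registration and calibrated exposures, which this sketch leaves out.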

MM: Who are your clients, and how do you manage to attract new customers?

Lubos B.: Our systems are typically used in the automotive industry, either directly by automotive companies or by their Tier-1 and Tier-2 suppliers. Our typical partner is an automation and robotics system integrator or a line builder – a company that builds entire lines.

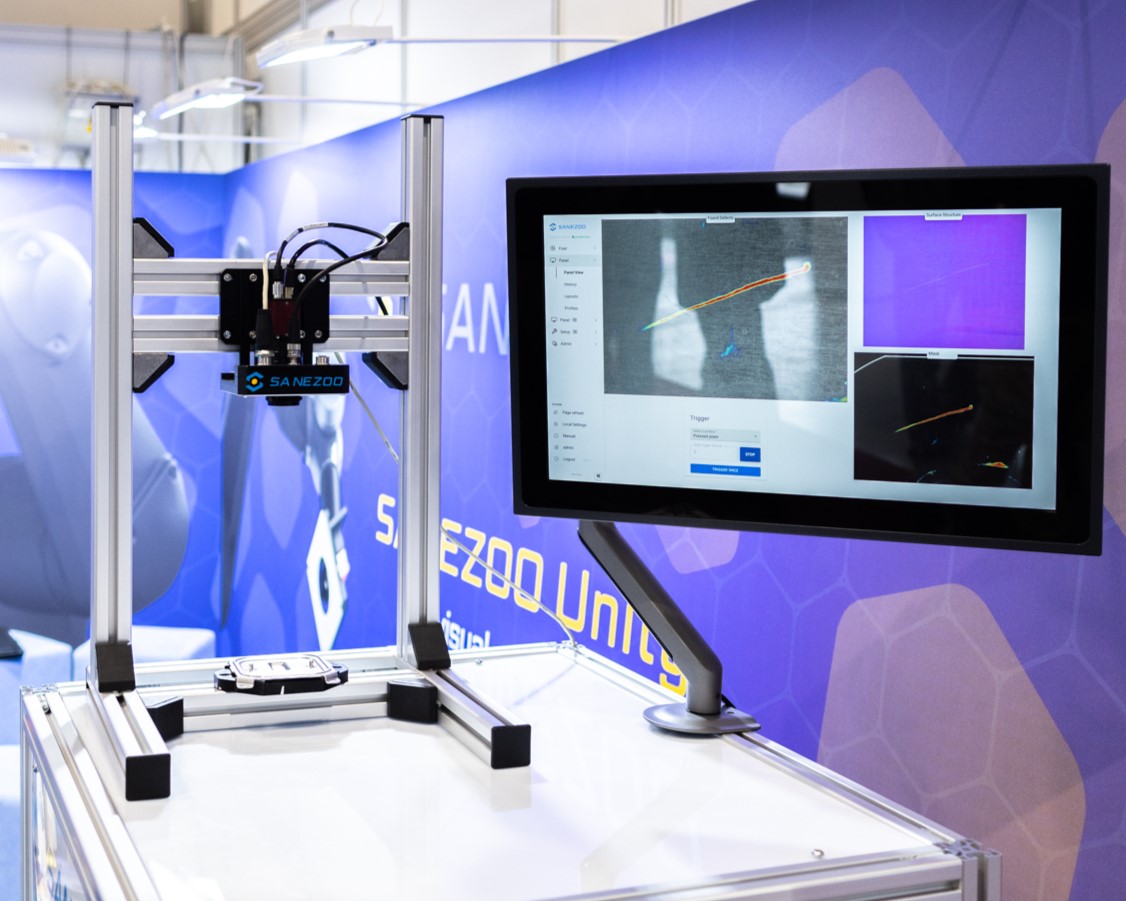

The Unity system is used for automatic visual quality control. It combines machine vision technology (a camera with special lighting) with analytical algorithms and a neural network. (Source: Sanezoo)

We visit manufacturing companies and ask them about their pain points. When we hear that something can't be automated, we pay attention and offer a solution. That's how we find opportunities and gaps in the market. At first, hardly anyone knew us, but now that we have very compelling references, we are gradually reaching other large customers and can quickly show them that our solution will work for them. Customers tend to be skeptical at first because they have often had experience with systems that did not work very well, even products from large suppliers. That's our opportunity: if we show customers that it can work and that we can do the job, they want to work with us.

Hardware, software and artificial intelligence go hand in hand

MM: What do you actually deliver to your customers? What does your visual inspection system consist of? Is it a piece of equipment, or is it mainly software?

Lubos B.: Our advantage is that we are not just a hardware company, a software company or an AI company; we connect all three of these areas. This sets us apart from other solutions available on the market. In terms of hardware, the foundations of our visual inspection system are the camera, the aforementioned special lighting and the computer. Of course, the software is also an integral part.

The data for processing is generated by our cameras using our special lighting. Some pre-processing may already take place in the camera, but the camera sends all the data to a device called a machine vision controller. This can be a server computer with a powerful graphics card (GPU). It runs a series of highly parallelized algorithms that process the camera data: both neural network inference and analytical machine vision algorithms, all interconnected. We don't use conventional neural networks of the kind known from other computer vision applications. We use our own proprietary machine learning networks, which we call multi-channel because they process multiple channels at once.

MM: What exactly do you mean by multiple channels?

Lubos B.: A conventional camera generates a single channel, that is, a single black-and-white image at a given moment. Our system, by contrast, simultaneously processes multiple images: images taken under different lighting conditions, surface texture, HDR (high dynamic range) images, glare-free images and high-resolution images. All of this data enters both the analytical algorithms and the neural networks simultaneously, as individual channels. All of these components work together, which is why we call the whole system Unity.
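To make the idea of multiple channels concrete, the sketch below stacks several equally sized single-channel images into one height x width x channels array, a layout that multi-channel models and analytical filters commonly consume. It is an illustration under stated assumptions, not Sanezoo's input format: the channel names and the function `stack_channels` are hypothetical, and normalizing each channel independently to the range 0 to 1 is our own choice, so that, for example, an HDR channel with a wide value range does not dominate an 8-bit channel.

```python
from typing import Dict, List

Image = List[List[float]]          # one channel: rows of pixel values
Stack = List[List[List[float]]]    # height x width x channels


def stack_channels(channels: Dict[str, Image], order: List[str]) -> Stack:
    """Stack equally sized single-channel images into a height x width x channels array.

    Each channel is min-max normalized to [0, 1] on its own; a constant channel becomes 0.0.
    """
    if not order:
        raise ValueError("need at least one channel")
    missing = [name for name in order if name not in channels]
    if missing:
        raise KeyError(f"missing channels: {missing}")

    normalized: List[Image] = []
    for name in order:
        img = channels[name]
        lo = min(min(row) for row in img)
        hi = max(max(row) for row in img)
        span = hi - lo
        normalized.append([[(v - lo) / span if span else 0.0 for v in row] for row in img])

    height, width = len(normalized[0]), len(normalized[0][0])
    if any(len(ch) != height or any(len(row) != width for row in ch) for ch in normalized):
        raise ValueError("all channels must have the same size")

    # For every pixel, collect the channel values in the requested order.
    return [[[ch[y][x] for ch in normalized] for x in range(width)] for y in range(height)]


# Usage with two hypothetical channels: an 8-bit image under one lighting section
# and an HDR image with a much wider value range.
example = stack_channels(
    {"light_section_1": [[0, 255], [255, 0]], "hdr": [[0.0, 1000.0], [500.0, 0.0]]},
    order=["light_section_1", "hdr"],
)
print(example[0][1])  # [1.0, 1.0]
print(example[1][0])  # [1.0, 0.5]
```

Because the result is a single array, the same stacked data can be passed both to analytical routines and to a model, which matches the interview's point that all channels enter both kinds of processing at once.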


MM: This is undoubtedly a vast amount of data that needs to be processed quickly. Where do the calculations physically take place? Where are the servers? Are they directly at the machines, in a data center, or could they be in the cloud?

Lubos B.: In production, everything usually runs on the client's premises, at the client's request, because they need manufacturing data to be processed continuously, regardless of whether their internet connection or other infrastructure is working. Another common reason is the fear of external cyber-attacks, data loss or data leakage. Large corporations usually have strict rules on external connectivity, and transferring terabytes of data to a remote server and back is unthinkable, not to mention the extreme load such traffic would place on local networks and internet connections. We can place our machine vision controllers in the client's server rooms if they have a fast local Ethernet network, but clients usually want them right at the line or machine, just like a PLC or a robot controller. We can help the client remotely with setup, but their data is not sent anywhere.
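A back-of-the-envelope calculation shows how quickly raw image data reaches terabytes. All figures below are illustrative assumptions, not Sanezoo specifications: a 12-megapixel (4000 x 3000) monochrome camera at 1 byte per pixel, 8 images per part (one per lighting configuration), one part per second, running around the clock, with no compression. The function name `inspection_data_volume` is hypothetical.

```python
def inspection_data_volume(width_px: int, height_px: int, bytes_per_px: int,
                           images_per_part: int, parts_per_second: float,
                           hours_per_day: float = 24.0) -> dict:
    """Raw (uncompressed) image data produced by one inspection station."""
    bytes_per_part = width_px * height_px * bytes_per_px * images_per_part
    bytes_per_second = bytes_per_part * parts_per_second
    return {
        "bytes_per_second": bytes_per_second,
        "megabits_per_second": bytes_per_second * 8 / 1e6,
        "terabytes_per_day": bytes_per_second * hours_per_day * 3600 / 1e12,
    }


# Illustrative assumptions only (see the text above).
volume = inspection_data_volume(4000, 3000, 1, 8, 1.0)
print(volume["megabits_per_second"])          # 768.0
print(round(volume["terabytes_per_day"], 2))  # 8.29
```

Under these assumptions, a single station produces about 768 Mbit/s, most of a 1 Gbit/s link, and more than 8 TB of raw data per day, which illustrates why processing close to the line is attractive.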

Sanezoo was awarded the MSV 2023 Gold Medal for its Unity system for intelligent visual quality control in the category of Innovation in Automation Technology and Industry 4.0. (Source: Sanezoo)

MM: How does your system learn to detect defects?

Lubos B.: Conventional AI-based systems work by learning from defect-free components. You show them a thousand good parts, the AI learns what they look like, and it reports a defect whenever it detects an anomaly. However, in manufacturing, too many factors change (tools wear out, materials change, etc.), so the inspection system must be reconfigured frequently to keep up with changing conditions. This costs time and money, and after several reconfigurations, users usually stop using such systems. Our approach is different. We understand the general properties of various surfaces and their typical categories of defects, and our system knows them in advance. Therefore, the manufacturer does not have to prepare sets of "OK" and "Not Good" components, because, as far as surface quality control is concerned, no product is unknown to our system. We simply need to know which areas of a given product's surface to inspect and the maximum severity of each type of defect that can be accepted at each location for the product to pass.
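The configuration described here, namely which surface areas to inspect and how severe each defect type may be at each location, can be pictured as a small rule table. The sketch below is hypothetical throughout: rectangular zones in image coordinates, a severity scale from 0 to 1, and the names `Zone`, `Defect` and `rejection_reasons` are our assumptions, not Sanezoo's configuration format. It assumes a detector has already reported each defect's type, position and severity; defects outside every zone are ignored, and a defect type not listed for a zone is treated as not allowed there.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Zone:
    """A rectangular inspection area on the part surface, in pixel coordinates."""
    name: str
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive
    max_severity: Dict[str, float]  # defect type -> highest acceptable severity

    def contains(self, x: int, y: int) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


@dataclass
class Defect:
    """One finding reported by a (hypothetical) detector."""
    kind: str
    x: int
    y: int
    severity: float  # assumed scale: 0.0 (negligible) to 1.0 (severe)


def rejection_reasons(defects: List[Defect], zones: List[Zone]) -> List[str]:
    """Return why the part fails; an empty list means it passes."""
    reasons = []
    for defect in defects:
        for zone in zones:
            if not zone.contains(defect.x, defect.y):
                continue  # this zone does not cover the defect
            limit = zone.max_severity.get(defect.kind, 0.0)  # unlisted type: not allowed
            if defect.severity > limit:
                reasons.append(
                    f"{defect.kind} severity {defect.severity} exceeds {limit} in zone {zone.name}"
                )
    return reasons


# Usage: strict limits on a functional sealing face, looser ones on a cosmetic side.
zones = [
    Zone("sealing_face", 0, 0, 100, 20, {"scratch": 0.1}),
    Zone("cosmetic_side", 0, 20, 100, 80, {"scratch": 0.5, "dent": 0.3}),
]
print(rejection_reasons([Defect("scratch", 50, 40, 0.4)], zones))  # []
print(rejection_reasons([Defect("scratch", 50, 10, 0.4)], zones))
# ['scratch severity 0.4 exceeds 0.1 in zone sealing_face']
```

The same scratch passes on the cosmetic side but fails on the sealing face, which is the kind of location-dependent tolerance the answer describes.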

Robotic parts handling

MM: So much for visual quality control. In the introduction, however, you also mentioned automating parts picking. What solution do you offer for this task?

Lubos B.: Yes, I did. Although we started out with camera systems only and had nothing to do with robots, we soon discovered that machine vision and robotic manipulation are related and are used together in production. It made sense to combine the two technologies. And yet, people working in machine vision know little about robots, and vice versa. We're connecting these two worlds and putting them on the same platform. We developed a product called Grasp, a complete system for picking parts out of a box in 3D, known as bin picking, and feeding them into a line or a machine. During handling, it can also perform automated visual quality control using our Unity product. Grasp uses passive stereovision (a pair of cameras mounted directly on the robot arm) and our lighting system, which also makes it suitable for shiny surfaces.
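Passive stereovision recovers depth from the shift (disparity) of the same surface point between the two camera images. For a calibrated, rectified pair of pinhole cameras, the standard relation is Z = f · B / d, where f is the focal length in pixels, B the baseline (distance between the cameras) and d the disparity in pixels; differentiating gives a depth uncertainty of roughly Z² / (f · B) per pixel of disparity error. The sketch below applies these textbook formulas; it is not a description of how Grasp computes depth, the function names are hypothetical, and the camera parameters are assumed for illustration.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m: float, focal_px: float, baseline_m: float,
                      disparity_error_px: float = 1.0) -> float:
    """First-order depth error in metres: |dZ| ~= Z**2 / (f * B) * |dd|."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Assumed parameters: 1400 px focal length, 6 cm baseline, 168 px measured disparity.
z = depth_from_disparity(168, 1400, 0.06)
print(round(z, 3))  # 0.5
# Depth error in millimetres for a 0.25 px disparity error:
print(round(depth_uncertainty(z, 1400, 0.06, 0.25) * 1000, 2))  # 0.74
```

Under this model the error grows with the square of the working distance, so halving the distance between the cameras and the part cuts the depth error to about a quarter.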

MM: Do you develop and manufacture your products in the Czech Republic?

Lubos B.: Yes. The development is, of course, done by our own colleagues, and we manufacture everything we can in the Czech Republic. We manufacture our own lighting components and controllers and plan to manufacture our own cameras for the Grasp module. However, not all production is in-house, as we source many components. For example, we don't have our own machine shop, and we don't make our own PCBs or do component placement ourselves. We prefer to source these from proven partners in the Czech Republic. Our company currently has more than thirty people, and when necessary, we hire additional external specialists for specific tasks.

out of the box and feeds them precisely into the designated place. (Source: Sanezoo)